Our research interests are in Inference, Modeling, Control, and Learning applied to Robotic Manipulation. We’re interested in the complex and exciting world of physical interactions. Below, you can find some of our current project outlines:

Click the title to read more about our work on language-conditioned, contact-rich tool manipulation policies. We present a generalizable and transferable manipulation framework for controlling and planning interactions between a tool and its environment.

TactileVAD: Geometric Aliasing-Aware Dynamics for High-Resolution Tactile Control

Click the title to read more about our work on touch-based control for objects with geometric aliasing. This work also introduces a novel tactile benchmark task: the tactile cartpole.

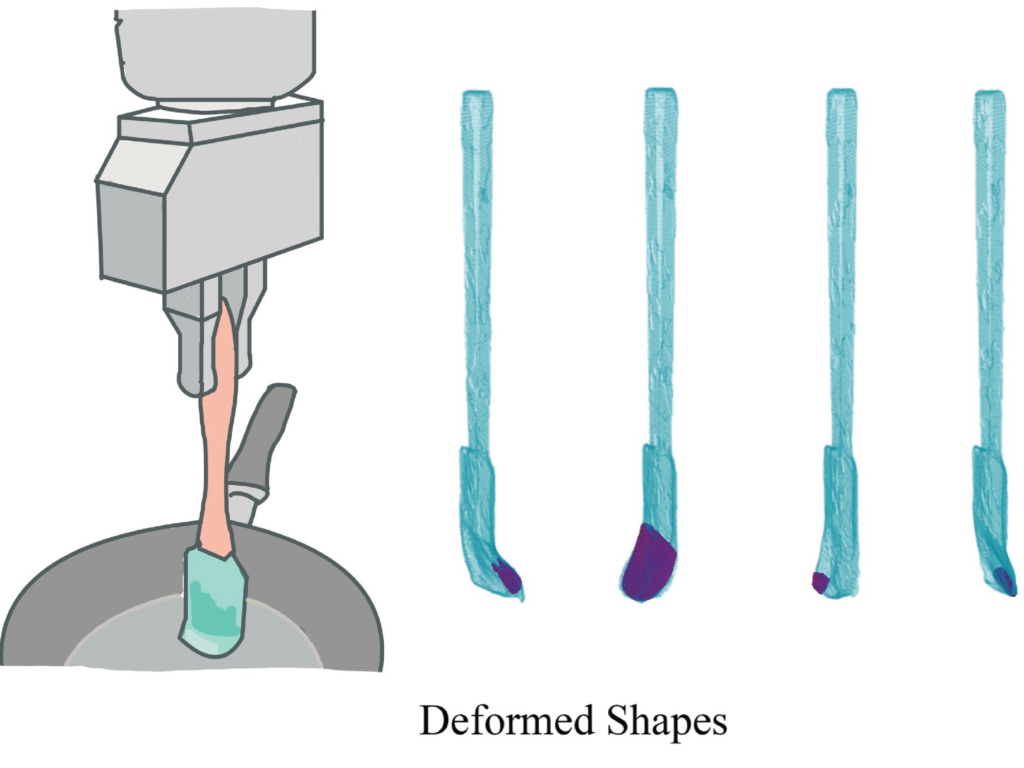

Integrated Object Deformation and Contact Patch Estimation from Visuo-Tactile Feedback

Click the title to read more about our work on jointly estimating how a tool deforms and how it contacts various shapes, both in simulation and on a physical robot system. Our proposed representation uses implicit neural fields to represent both the deforming tool geometry and the contact interface between the tool and the environment.

MultiSCOPE: Disambiguating In-Hand Object Poses with Proprioception and Tactile Feedback

Click the title to read more about our work on in-hand object pose estimation with a bimanual robot system. The robots use multiple contact actions along with proprioception and tactile feedback to estimate the pose of each tool.

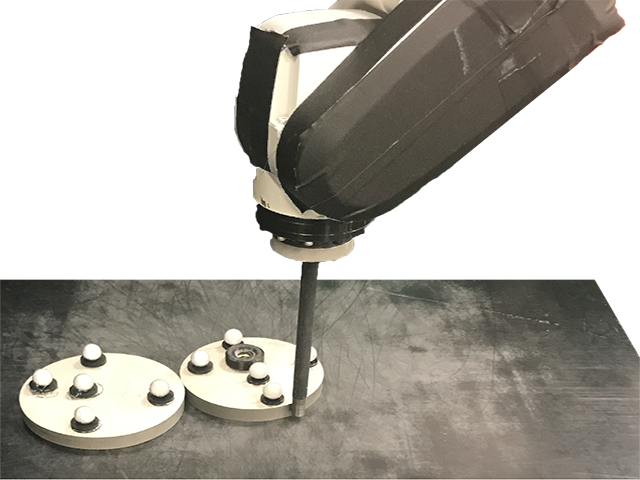

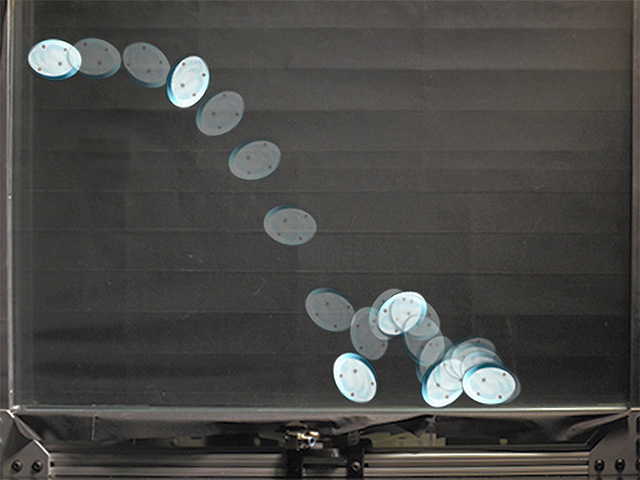

Precise Object Sliding with Top Contact via Asymmetric Dual Limit Surfaces

Click the title to read more about our work on mechanics and planning algorithms for sliding an object across a horizontal planar surface via frictional patch contact made with its top surface.

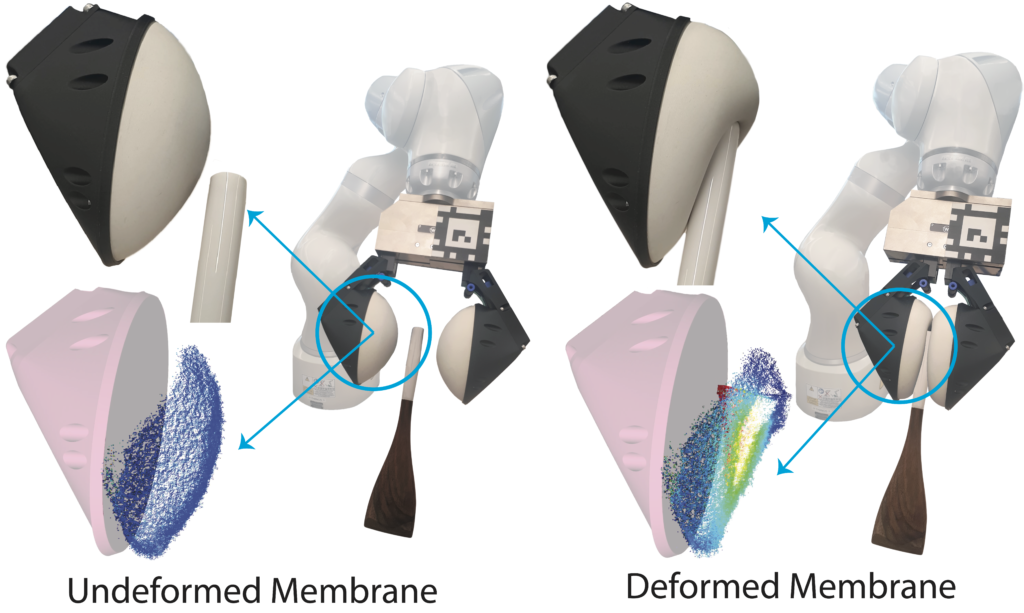

Manipulation via Membranes: High-Resolution and Highly Deformable Tactile Sensing and Control

Click the title to read more about our work on object manipulation and tool use with deformable high-resolution membrane sensors. The robot learns the dynamics of the deformable membranes and uses them to control the pose of grasped objects and the forces transmitted to the environment.

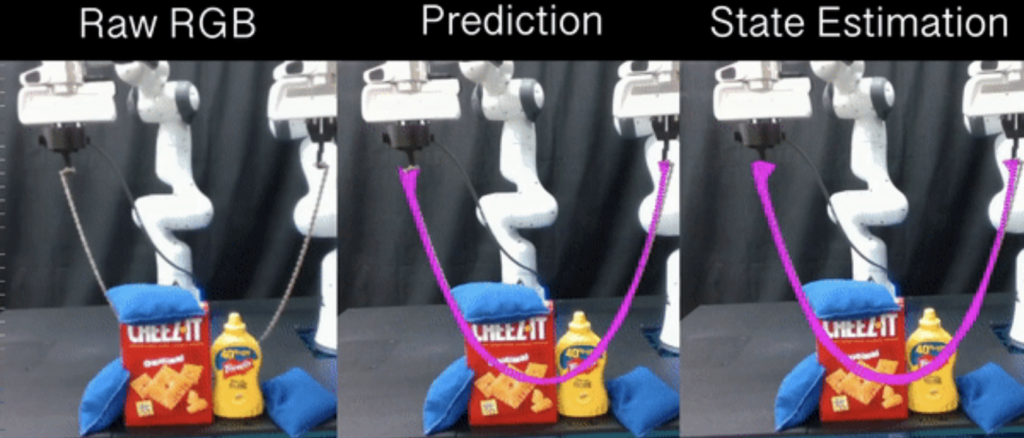

VIRDO++: Real-World, Visuo-Tactile Dynamics and Perception of Deformable Objects

Click the title to read more about our novel approach to multimodal visuotactile state estimation and dynamics prediction for deformable objects, along with its real-world demonstration.

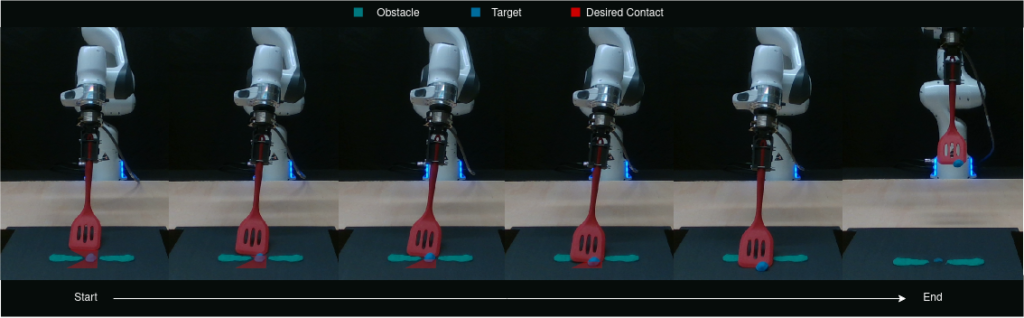

Learning the Dynamics of Compliant Tool-Environment Interaction for Visuo-Tactile Contact Servoing

Click the title to read more about our work on representing and controlling extrinsic contact between a compliant tool and the environment, including in the presence of obstacles.

Visuo-Tactile Transformers for Manipulation

Click the title to read more about our work on multimodal reinforcement learning with transformer networks in simulated and real-world environments.

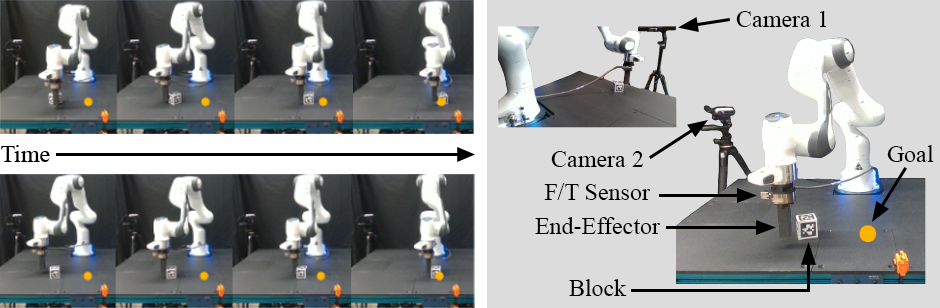

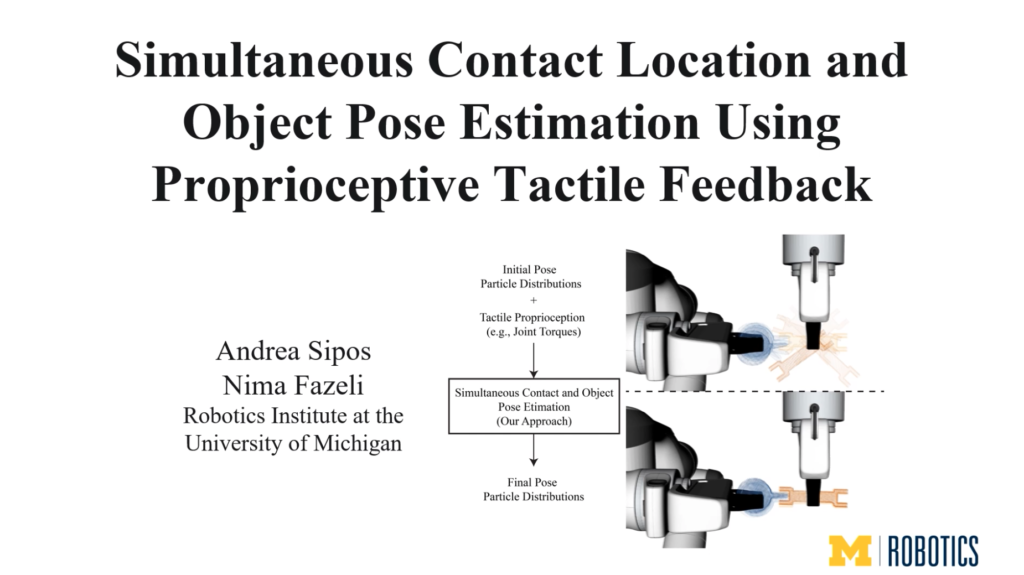

Simultaneous Contact Location and Object Pose Estimation

Click the title to read more about our work on in-hand object pose estimation. The robot uses only proprioceptive tactile feedback to estimate the pose of grasped objects by leveraging the contacts it makes between them.

Visio-Tactile Implicit Representations of Deformable Objects

Click the title to read more about our work on learning multimodal neural implicit representations of deformable objects!

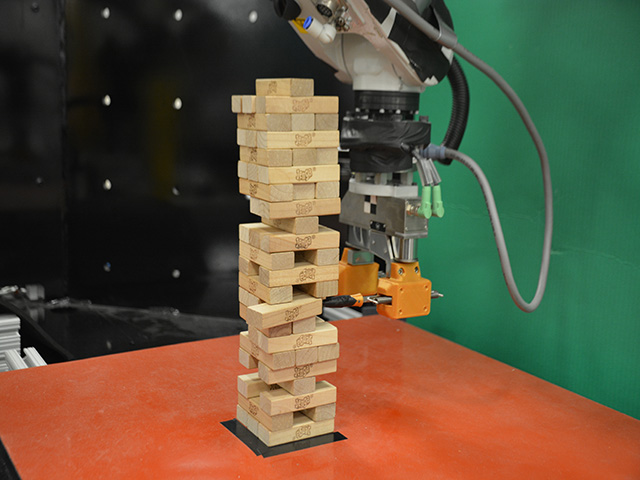

Hierarchical Learning for Manipulation

Click the title to read more about our work on hierarchical multimodal representation learning for complex manipulation tasks.

Blind Object Retrieval In Clutter

Click the title to read more about our work on using tactile feedback to localize objects blindly in clutter. Our robot rummages through the clutter, clusters the objects it touches, and picks out the desired object.

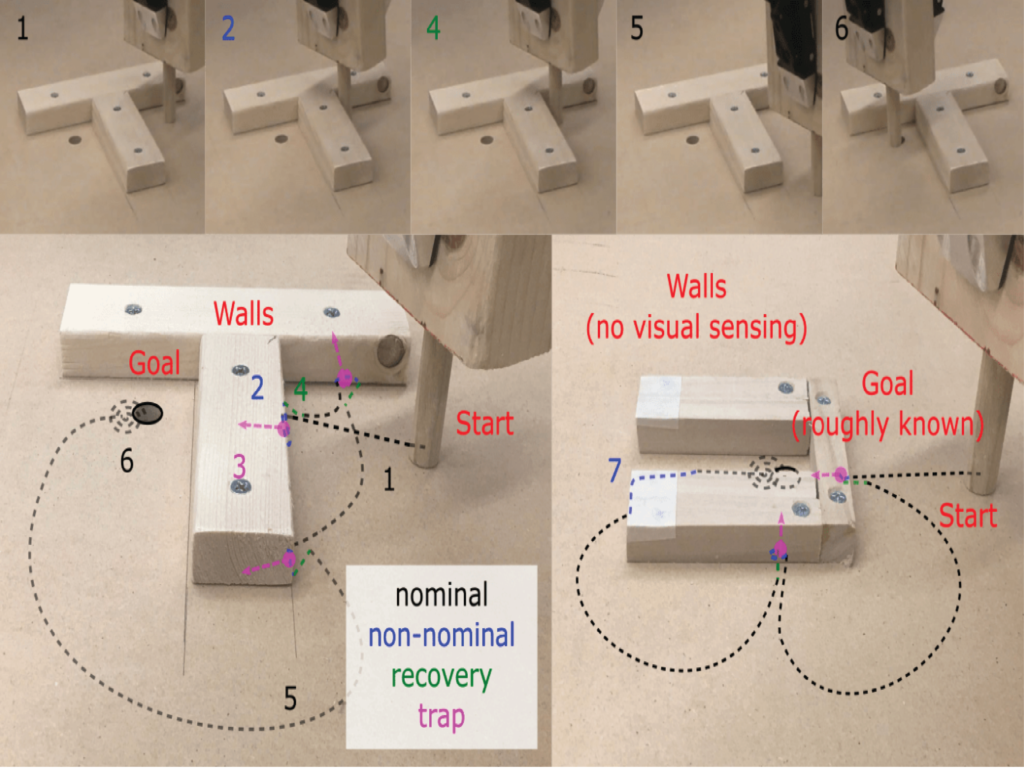

Trap Aware Model-Predictive Control

Click the title to read more about our work on using tactile feedback to blindly navigate novel environments and build trap-aware representations that help the robot achieve its goals.

Manipulation for Industrial Automation and Warehouse Logistics

Click the title to read more about our work on developing systems capable of autonomous picking and stowing as part of our entries to the Amazon Robotics Challenges.

Data-Augmented Models for Manipulation

Click the title to read more about our work on developing models that bring together the best of physics-based and data-driven methods.

Contact Modeling, Validation, and Inference

Click the title to read more about our work on identification and evaluation of commonly used contact models for robotics applications.

High-Resolution and High-Deformation Tactile Reasoning

Click the title to read more about our work on developing models and controllers that enable object manipulation with high-resolution and highly deformable tactile sensors.